Following Carolina Leal’s interview with Prof De Wet Swanepoel on the HearScope system, Spotlight on Innovation asked Dr Jenny Nesgaard Pedersen to review the system’s new AI feature.

In the last decade, artificial intelligence (AI) and machine learning have become popular buzzwords in the media. We have already seen the benefits of advances in this area in the form of user adaptation, which enhances the online experience for search engine queries and online shopping. Large corporations such as Facebook and Amazon employ neuroscientists as part of their investment in AI.

Artificial intelligence refers to machines or computers mimicking cognitive functions that humans associate with the human mind, such as learning and problem solving [1]. In audiology and ENT, AI has been introduced in hearing aid design and feature functionality, where it aims to give end users a transparent and seamless listening experience by predicting and mimicking the end user’s decision process in a given listening condition.

The latest AI offering is aimed at improving access to ear care in the underserved areas of the world.

The problem

Most regions of the world face an access barrier when it comes to ENT and audiological services [2]. The global impact of the shortage of audiologists and other hearing healthcare personnel is substantial: over 90% of the world’s population has limited or no access to audiology and ENT services. Because of its impact on communication, literacy, and employability, hearing loss is a major risk factor for poverty; conversely, people living in poverty, who face greater exposure to disease, poorer hygiene and limited access to healthcare, are more likely to have hearing loss. Otitis media is the second most common cause of hearing loss and the most common childhood illness, especially in low- and middle-income countries [3]. Untreated otitis media can lead to permanent hearing loss, illness secondary to the infection and, in some cases, death [4].

The introduction of telehealth is a possible solution to the access barrier. Telehealth allows one hearing care professional to serve larger populations remotely and asynchronously: community-based health workers gather audiological data using purpose-built screening equipment, and an audiologist reviews the results and recommends a treatment plan for each patient. Studies have investigated the feasibility of this approach, and one found that a group of seven healthcare workers with minimal training could test 100 children per hour [5].

This model does, however, have limitations when it comes to identifying active infections, perforations and other contraindications in the field before the hearing test is performed. In the hands of a community health worker with little or no audiology training, these conditions may be missed or overlooked. This can compromise the screening results gathered and could also pose a risk of cross-contamination between patients.

Recent research has examined the use of AI to classify otitis media and other ear conditions accurately. One study found that image processing could automatically classify otoscopy images with an accuracy of 78.7% for images captured on-site with a low-cost, custom-made video otoscope, compared with 80.6% for images taken with commercial video otoscopes [6]. The approach was later developed into a smartphone- and cloud-based automated otitis media diagnosis system and further improved by adding a neural network to classify the features (vectors) extracted from images of the ear canal and tympanic membrane.

The system runs on an Android smartphone, using a downloadable app and an otoscope that connects to the phone. Published results for this improved system showed 87% diagnostic accuracy. The system “allows the user to load a video otoscope-captured tympanic membrane image in an Android application, and pre-process the image on the smartphone, after which the pre-processed image is sent to a server where feature extraction and classification/diagnosis is performed” [7]. The diagnosis is then returned to the phone and displayed to the user.

This system has recently completed the research and development phase and has been released to the public as a beta version.
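The division of labour quoted above, with pre-processing on the phone and feature extraction plus classification on a server, can be pictured as a round trip of JSON messages. This is a minimal sketch under stated assumptions, not the implementation described in [7]: the function names, the toy grayscale “image”, the two features (mean intensity and contrast) and the contrast threshold are all hypothetical placeholders, and no real network call is made.

```python
import json
from statistics import mean, pstdev

# Hypothetical sketch of the phone/server split; not the system in [7].
# An "image" here is a toy 2D list of 8-bit grayscale pixel values.


def preprocess_on_phone(image, step=2):
    """Phone side: downsample by keeping every `step`-th row and column,
    then scale pixel values to [0, 1] so less data is uploaded."""
    small = [row[::step] for row in image[::step]]
    return [[px / 255.0 for px in row] for row in small]


def build_request(preprocessed):
    """Phone side: serialize the pre-processed image into an upload payload."""
    return json.dumps({"image": preprocessed})


def server_handle(request_body):
    """Server side: parse the payload, extract a feature vector and classify it.
    The two features and the threshold are placeholders, not a clinical rule."""
    image = json.loads(request_body)["image"]
    pixels = [px for row in image for px in row]
    features = {"mean_intensity": mean(pixels), "contrast": pstdev(pixels)}
    label = "abnormal" if features["contrast"] > 0.25 else "normal"
    return json.dumps({"diagnosis": label, "features": features})


def display_on_phone(response_body):
    """Phone side: decode the server reply and return the diagnosis to show."""
    return json.loads(response_body)["diagnosis"]


if __name__ == "__main__":
    uniform = [[128] * 8 for _ in range(8)]          # flat image, no contrast
    reply = server_handle(build_request(preprocess_on_phone(uniform)))
    print(display_on_phone(reply))                   # normal
```

Keeping the heavy steps on the server means the phone only needs enough processing power to shrink and send the image, which is one reason a cloud design suits low-cost hardware.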

The HearScope system

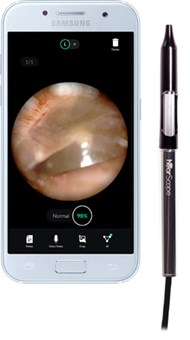

The HearX group launched the HearScope system with an AI add-on in a beta version in March of 2020. The system offers a low-cost smartphone-based video otoscope that is used to capture images and video of the ear canal and tympanic membrane.

The AI function gives an image classification within seconds, classifying the image using the most common ear disease categories.

HearScope app (displaying a normal tympanic membrane) and video otoscope.

Image courtesy of HearX Group.

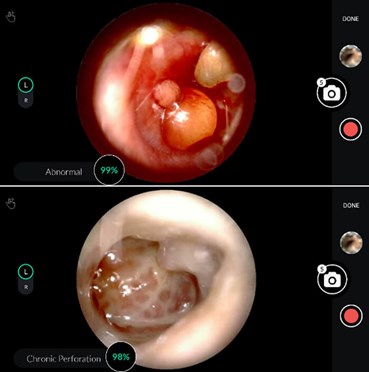

HearScope app displaying an abnormal otoscopy result, with its classification and confidence level.

Image courtesy of HearX Group.

The HearScope system uses two AI systems in sequence to classify otoscopy images. The first checks whether the image shows the ear canal and tympanic membrane. If an ear canal is confirmed, the second neural network classifies the image into one of four categories: normal, wax obstruction, chronic perforation and abnormal. These classifications are based on a large database of images that at least two ENT specialists assigned to the same category; this database serves as the ground truth. The abnormal category groups a number of other specific conditions that require treatment, such as acute otitis media, so the system can triage patients accordingly. As the ground truth database grows, more specific diagnostic categories will be supported.
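The two-stage design described above is a classification cascade: a gate rejects images that do not show an ear canal before the category classifier ever sees them. The sketch below assumes two hypothetical model callables, `ear_canal_prob` and `category_logits`, standing in for the two neural networks, and a hypothetical gate threshold of 0.5. It also assumes that the confidence level shown in the app is the top softmax probability; the source does not say how HearScope computes its confidence.

```python
import math
from typing import Callable, Sequence

CATEGORIES = ("normal", "wax obstruction", "chronic perforation", "abnormal")


def softmax(logits: Sequence[float]) -> list:
    """Turn raw scores into probabilities that sum to 1."""
    top = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_otoscopy(
    image: object,
    ear_canal_prob: Callable[[object], float],              # hypothetical stage-1 model
    category_logits: Callable[[object], Sequence[float]],   # hypothetical stage-2 model
    gate_threshold: float = 0.5,                            # assumed cut-off
) -> dict:
    """Stage 1 rejects images that do not show an ear canal; only images that
    pass are sent to stage 2, which picks one of the four categories."""
    p_ear = ear_canal_prob(image)
    if p_ear < gate_threshold:
        return {"status": "rejected", "p_ear_canal": p_ear}
    probs = softmax(category_logits(image))
    if len(probs) != len(CATEGORIES):
        raise ValueError("stage-2 model must return one score per category")
    best = max(range(len(probs)), key=probs.__getitem__)
    # Assumption: the confidence shown is the winning class probability.
    return {"status": "classified", "category": CATEGORIES[best], "confidence": probs[best]}


if __name__ == "__main__":
    def looks_like_ear(img):      # stub stage-1 model for the demo
        return 0.9

    def not_an_ear(img):          # stub stage-1 model that fails the gate
        return 0.1

    def scores(img):              # stub stage-2 model
        return [0.2, 2.5, 0.1, 0.4]

    print(classify_otoscopy("photo", looks_like_ear, scores)["category"])  # wax obstruction
    print(classify_otoscopy("photo", not_an_ear, scores)["status"])        # rejected
```

Gating first keeps the category classifier from being asked to label photos that are not otoscopy images at all, so an out-of-scope image is rejected rather than being forced into one of the four categories.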

Initial studies found the performance of the automated otitis media diagnosis system to be comparable and, in some cases, superior to the diagnostic accuracy of medical specialists or otologists using handheld otoscopes. The average diagnostic accuracy of paediatricians is around 80%, that of GPs 64–75% and that of ENTs 73%. The decision tree classifier achieved 81.58% accuracy, rising to 86.84% when the neural network was used [7]. The currently released system classifies images into the four supported diagnostic categories with 94% accuracy.
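Classification accuracy is conventionally the proportion of images whose predicted category matches the ground truth label. A minimal sketch of that calculation, together with a per-category breakdown, is shown below. The label lists are made up for illustration and are not data from [7] or from HearScope.

```python
from collections import Counter


def accuracy(truth, predicted):
    """Share of images whose predicted category equals the ground-truth label."""
    if len(truth) != len(predicted) or not truth:
        raise ValueError("need two non-empty label lists of equal length")
    hits = sum(t == p for t, p in zip(truth, predicted))
    return hits / len(truth)


def per_category_recall(truth, predicted):
    """For each ground-truth category, the share of its images classified correctly."""
    totals = Counter(truth)
    hits = Counter(t for t, p in zip(truth, predicted) if t == p)
    return {cat: hits[cat] / n for cat, n in totals.items()}


if __name__ == "__main__":
    # Made-up labels for illustration only.
    truth = ["normal", "normal", "normal", "wax obstruction", "abnormal"]
    predicted = ["normal", "normal", "wax obstruction", "wax obstruction", "normal"]
    print(accuracy(truth, predicted))             # 0.6
    print(per_category_recall(truth, predicted))  # normal ≈ 0.67, wax obstruction 1.0, abnormal 0.0
```

In this toy set the overall figure of 60% hides the fact that the single abnormal case was missed, which is why a per-category view matters when a tool is used for triage.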

The system is cloud-based and therefore needs an internet connection to return a classification. However, it can also be used asynchronously: images are saved locally within the HearScope app and uploaded to the cloud once an internet connection is available.
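The store-and-forward behaviour described above amounts to a local queue that is drained only when a connection exists. The class below is a hypothetical sketch of that pattern, not the HearScope app’s code: `StoreAndForwardQueue`, its methods and the `upload` callback are invented names, and the connectivity check is reduced to a boolean argument.

```python
from collections import deque
from typing import Callable, List


class StoreAndForwardQueue:
    """Hypothetical offline-first uploader: captures stay on the device until
    a connection is available, then go to the server oldest first."""

    def __init__(self, upload: Callable[[dict], bool]):
        self._pending = deque()   # captured but not yet uploaded, oldest first
        self._upload = upload     # returns True if the server accepted the item

    def capture(self, image_id: str, data: bytes) -> None:
        """Save a capture locally; nothing is sent yet."""
        self._pending.append({"id": image_id, "data": data})

    def flush(self, online: bool) -> List[str]:
        """While online, upload pending items in capture order. Stop at the first
        failed upload so that item and any later ones stay queued for a retry."""
        sent = []
        while online and self._pending:
            item = self._pending[0]
            if not self._upload(item):
                break
            self._pending.popleft()
            sent.append(item["id"])
        return sent

    def pending_ids(self) -> List[str]:
        """IDs of captures still waiting on the device."""
        return [item["id"] for item in self._pending]


if __name__ == "__main__":
    received = []

    def fake_upload(item):      # stands in for the real cloud upload
        received.append(item["id"])
        return True

    queue = StoreAndForwardQueue(fake_upload)
    queue.capture("left-ear", b"\x00")
    queue.capture("right-ear", b"\x00")
    print(queue.flush(online=False))  # []
    print(queue.flush(online=True))   # ['left-ear', 'right-ear']
    print(queue.pending_ids())        # []
```

Removing an item only after the server has accepted it means a dropped connection mid-upload loses nothing; the image simply stays queued for the next attempt.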

This use of AI is a world first and looks promising for improving access to diagnostic expertise in areas where audiology and ENT services are in short supply, and often entirely absent. For markets with good penetration of audiology services, the offering is an affordable way to introduce video otoscopy into practice. And for practitioners working alone without colleagues to consult, the AI classification feature offers a reassuring second opinion that cross-checks the clinician’s diagnosis.

For more information, visit: www.hearxgroup.com/hearscope

References

1. Russell S, Norvig P. Artificial Intelligence: A Modern Approach. 3rd ed. USA: Pearson; 2009.

2. Fagan JJ, Jacobs M. Survey of ENT services in Africa: need for a comprehensive intervention. Global Health Action 2009;2:1–7.

3. Vos T, Barber RM, Bell B, et al. Global, regional, and national incidence, prevalence, and years lived with disability for 301 acute and chronic diseases and injuries in 188 countries, 1990–2013: a systematic analysis for the Global Burden of Disease Study 2013. Lancet 2015;386(9995):743–800.

4. Monasta L, Ronfani L, Marchetti F, et al. Burden of disease caused by otitis media: systematic review and global estimates. PLoS One 2012;7(4):e36226.

5. Jayawardena ADL, Nassiri AM, Levy DA, et al. Community health worker-based hearing screening on a mobile platform: A scalable protocol piloted in Haiti. Laryngoscope Investigative Otolaryngology 2020;5(2):305–12.

6. Myburgh HC, van Zijl WH, Swanepoel D, et al. Otitis media diagnosis for developing countries using tympanic membrane image-analysis. EBioMedicine 2016;5:156–60.

7. Myburgh H, Jose S, Swanepoel D, Laurent C. Towards low cost automated smartphone- and cloud-based otitis media diagnosis. Biomedical Signal Processing and Control 2018;39:34–52.