Until recently, hearing aid manufacturers focused mainly on improving speech intelligibility. Today’s hearing aid users, however, have much broader demands and often cite improved music perception as a key goal. Drs Tish Ramirez and Rebecca Herbig from Signia outline the technology changes that hearing aid manufacturers are implementing to address this need.

The primary goal of hearing instruments, and of the typical hearing aid fitting, has always been to optimise speech intelligibility in a variety of situations. As a result, hearing aids have been designed first and foremost to process speech, with the processing of other sounds, including music, treated as secondary. Even in recent years, when a dedicated music program was offered, it often simply entailed less compression and a broader frequency response. These so-called dedicated music settings were nevertheless still confined within an instrument optimised for speech.

Growing demand for better performance with music

With the rapid advancement of hearing aid technology, modern hearing aids now deliver a high level of speech understanding. Binaural beamforming and advanced noise reduction algorithms have proven effective even in noisy situations, which have always been challenging for hearing aid wearers. In fact, clinical results show that some hearing aids now allow wearers to understand speech in challenging environments, such as cocktail parties and busy restaurants, better than even their normal-hearing counterparts [1]. At the same time, the performance of hearing instruments in non-speech scenarios, such as music, has become increasingly important. In one recent analysis, Várallyay et al. found that the number of professionally published articles related to music has increased, while the number related to speech has decreased [2].

Optimising hearing aids for speech vs. music

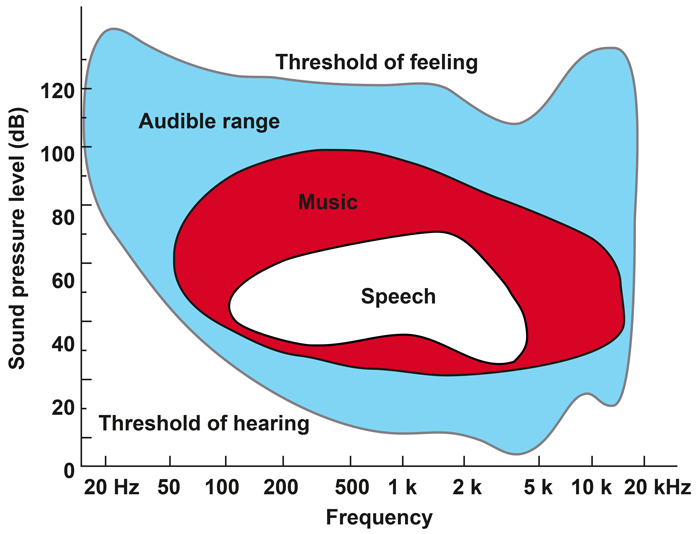

Before we discuss how hearing aids can be optimised for the enjoyment of music, it is important to understand how speech and music signals differ. Compared to speech, music covers a much wider dynamic and frequency range. Várallyay and colleagues suggest that if the area covered by speech sounds in the frequency-intensity visualisation of the human audible range is called the ‘speech banana’, then the range of music sounds could be called the ‘music watermelon’ (Figure 1). To capture the full range of music, therefore, hearing aids must be able to pick up, process and output a wider range of intensities and frequencies with fidelity than speech requires.

Figure 1. The frequency-intensity visualisation of the human audible range (adapted from Várallyay et al. [2]).

Wearers’ requirements for listening to music differ greatly from their requirements for speech, so optimising hearing aid processing for music enjoyment is a different goal from maximising speech intelligibility. With speech, compression serves to make soft speech audible and loud speech comfortable. With music, however, less compression is desirable to preserve the spectral contrast.
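To make the compression trade-off concrete, here is a minimal Python sketch of a static compressor input/output curve. The threshold, ratio and gain values are hypothetical and not taken from any fitting formula or product; real hearing aid compressors are multichannel and have attack/release times and knees that this sketch ignores. The same arithmetic applies whether the two levels are a soft and a loud passage or, with independent compression in each frequency channel, a spectral peak and a neighbouring valley: above threshold, a level difference of D dB shrinks to D/ratio dB.

```python
def compressed_output_db(input_db: float, threshold_db: float,
                         ratio: float, gain_db: float) -> float:
    """Static input/output curve of a simple compressor (all levels in dB).

    Below threshold the gain is linear; above it, each `ratio` dB of input
    increase produces only 1 dB of output increase.
    """
    if input_db <= threshold_db:
        return input_db + gain_db
    return threshold_db + (input_db - threshold_db) / ratio + gain_db


def output_contrast_db(soft_db: float, loud_db: float, threshold_db: float,
                       ratio: float, gain_db: float) -> float:
    """Level difference between two inputs after compression."""
    return (compressed_output_db(loud_db, threshold_db, ratio, gain_db)
            - compressed_output_db(soft_db, threshold_db, ratio, gain_db))


# Hypothetical example: two inputs 30 dB apart, both above a 50 dB threshold.
SOFT, LOUD = 60.0, 90.0
speech_like = output_contrast_db(SOFT, LOUD, threshold_db=50.0, ratio=3.0, gain_db=20.0)
music_like = output_contrast_db(SOFT, LOUD, threshold_db=50.0, ratio=1.5, gain_db=20.0)
print(f"ratio 3:1   -> {speech_like:.1f} dB of contrast left")
print(f"ratio 1.5:1 -> {music_like:.1f} dB of contrast left")
```

With these assumed settings, a 3:1 ratio leaves 10 dB of the original 30 dB contrast, while a gentler 1.5:1 ratio leaves 20 dB.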

When speech is the focus, all other sounds should be suppressed to provide the wearer with as clean a signal as possible. Directional microphones and noise reduction algorithms have proven very effective for this. When listening to music, however, sound quality is often better when hearing aids approximate the natural directionality provided by the pinna, which preserves a surround-sound effect. Noise reduction should also be disabled in this situation, because noise reduction algorithms can easily mistake components of music for noise.
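The difference between strongly directional and more natural pickup can be sketched with the textbook first-order microphone pattern, response(θ) = α + (1 − α)cos θ. This is a simplified, frequency-independent illustration: α = 1 is omnidirectional and α = 0.5 is a cardioid, while the intermediate value α = 0.75 is a hypothetical stand-in for mild front emphasis. Real pinna effects are strongly frequency dependent, and this is not how any particular product mode is implemented.

```python
import math


def first_order_response(theta_deg: float, alpha: float) -> float:
    """Magnitude of the first-order pattern alpha + (1 - alpha) * cos(theta).

    theta is the angle of arrival (0 = straight ahead). alpha = 1.0 is
    omnidirectional; alpha = 0.5 is a cardioid with a null at the rear.
    The pattern is frequency independent, unlike a real pinna.
    """
    theta = math.radians(theta_deg)
    return abs(alpha + (1.0 - alpha) * math.cos(theta))


# alpha = 0.75 is a hypothetical "mild front emphasis" value.
PATTERNS = {"omnidirectional": 1.0, "mild (hypothetical)": 0.75, "cardioid": 0.5}

for name, alpha in PATTERNS.items():
    rear = first_order_response(180.0, alpha)  # front response is always 1.0
    if rear < 1e-9:
        print(f"{name:<20} rear: null")
    else:
        print(f"{name:<20} rear: {20 * math.log10(rear):.1f} dB re front")
```

Strong rear attenuation suits a listener who wants to focus on one direction; a pattern closer to omnidirectional keeps sounds from all around audible, which is the aim when approximating natural directionality for music.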

Beyond optimising hearing aid processing for music, we must also consider the listening situation in which music is enjoyed. Most hearing aids offer specialised programs for different speech situations, such as outdoors or in noise. These programs trigger specific hearing aid settings to meet the wearer’s listening requirements in each situation. By the same token, it is simplistic to assume that a single music program can provide the best music listening experience at all times. By distinguishing between the environments in which music listening takes place, we can fine-tune hearing aid settings even more specifically to maximise the wearer’s listening experience.

Designing hearing aids for music and music lovers

As wearers demand better solutions for listening to music, manufacturers are working toward this goal. Select hearing instruments on the market now offer an extended frequency range up to 12 kHz and an extended input dynamic range reaching well above 110 dB. These improvements allow the hearing aid to accommodate the larger frequency and intensity range of the ‘music watermelon’.
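Two textbook relations give a feel for what these specifications demand of the signal path. By the Nyquist criterion, reproducing frequencies up to 12 kHz requires a sampling rate above 24 kHz, and an ideal N-bit converter has a quantisation signal-to-noise ratio of about 6.02N + 1.76 dB for a full-scale sine wave. The sketch below assumes, for illustration only, that the whole 110 dB must be represented by a single ideal converter; real devices may handle loud inputs differently, and the results are idealised lower bounds, not specifications of any product.

```python
import math


def min_sample_rate_hz(max_freq_hz: float) -> float:
    """Nyquist bound: the sampling rate must exceed twice the highest frequency."""
    return 2.0 * max_freq_hz


def ideal_bits_for_range(dynamic_range_db: float) -> int:
    """Smallest bit depth N whose ideal SNR, 6.02*N + 1.76 dB, covers the range.

    Assumes an ideal converter and a full-scale sine wave; real converters
    fall short of this figure.
    """
    return math.ceil((dynamic_range_db - 1.76) / 6.02)


print(min_sample_rate_hz(12_000))   # 24000.0
print(ideal_bits_for_range(110.0))  # 18
```

For comparison, the same formula gives 16 bits for a 96 dB range, which is why 16-bit audio is often described as having roughly 96 to 98 dB of dynamic range.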

Manufacturers like Signia are also developing solutions for musical enjoyment in three distinct listening environments:

- Listening to recorded music.

- Listening to a live musical performance.

- Playing a musical instrument.

The three music programs share some features, such as disabled digital noise reduction and situation-specific frequency shaping and compression optimised for music, but they also differ in notable ways.

The recorded music program engages TruEar, a microphone mode for BTE and RIC instruments designed to simulate the directional properties of the outer ear. This helps create a more natural, surround-sound effect. Because the wearer is typically in the audience facing the stage, the live music program activates directional microphones to pick up the performance in front rather than the crowd behind. The third program is designed for musicians. It focuses on preserving the natural dynamics of the music so that performing musicians can better judge the loudness of their own instrument or voice relative to the other musicians playing with them. TruEar mode is applied here as well, since a musician could be positioned anywhere on stage and needs to hear from all directions.
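The shared and differing settings of the three programs can be summarised as a small data structure. This is a hypothetical Python sketch, not Signia’s fitting software or API: the class, field and enum names are invented for illustration, and frequency shaping and compression parameters are omitted because the article does not specify them. It encodes only what the text states: noise reduction off in every program, TruEar for recorded music and for playing, and directional microphones for live performance.

```python
from dataclasses import dataclass
from enum import Enum


class MicMode(Enum):
    """Microphone modes named in the article."""
    TRUEAR = "TruEar (simulated outer-ear directionality)"
    DIRECTIONAL = "directional (focus on the front)"


class Situation(Enum):
    """The three music listening situations described in the article."""
    RECORDED = "listening to recorded music"
    LIVE = "listening to a live performance"
    PLAYING = "playing a musical instrument"


@dataclass(frozen=True)
class MusicProgram:
    """Hypothetical summary of one music program's key settings."""
    situation: Situation
    mic_mode: MicMode
    focus: str
    noise_reduction: bool = False  # disabled in all three music programs


PROGRAMS = {
    Situation.RECORDED: MusicProgram(
        Situation.RECORDED, MicMode.TRUEAR,
        focus="natural, surround-sound effect"),
    Situation.LIVE: MusicProgram(
        Situation.LIVE, MicMode.DIRECTIONAL,
        focus="performance in front rather than the crowd behind"),
    Situation.PLAYING: MusicProgram(
        Situation.PLAYING, MicMode.TRUEAR,
        focus="natural dynamics, so musicians can judge their own loudness"),
}


def select_program(situation: Situation) -> MusicProgram:
    """Return the (hypothetical) program for a given listening situation."""
    return PROGRAMS[situation]


for situation in Situation:
    program = select_program(situation)
    print(f"{situation.value}: {program.mic_mode.value}; "
          f"noise reduction {'on' if program.noise_reduction else 'off'}")
```

Keeping one program per listening situation, rather than a single generic music program, mirrors the article’s point that the environment determines the best settings.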

Summary

Because the frequency and intensity range of music is significantly greater than that of speech, and because listeners’ requirements for music differ from those for speech, hearing aids need to be redesigned to offer a satisfactory music listening experience. Besides expanding the hearing aid’s frequency response and dynamic range to capture the wider range of music, designers of dedicated music processing should also consider the listening situation in which music is enjoyed. Manufacturers like Signia are developing technology that goes beyond improving speech intelligibility to help satisfy the listening requirements of music lovers and audiophiles alike.

References

1. Powers T, Froehlich M. Clinical Results with a New Wireless Binaural Directional Hearing System. Hearing Review 2014;21(11):32-4.

2. Várallyay G, Legarth SV, Ramirez T. Music lovers and hearing aids. AudiologyOnline 2016;Article 16478. Available from www.audiologyonline.com.

Declaration of Competing Interests: Both authors are currently employed by Sivantos Inc, the manufacturer of Signia-branded hearing aids.