At a family dinner, amid the clinking of dishes and glasses and the sounds of conversation and laughter, a husband who wears hearing aids misses his wife’s request to bring another bottle of wine. After her third try, the wife, who has normal hearing, complains, “Where are your ears?” The husband replies, “I can hear you, but I don’t understand because you mumble!”

Many hearing-aid wearers ask: Why do I hear but not understand? Extracting information from speech relies on both hearing the words and understanding their meaning [1]. In the above example, the husband’s difficulties might have to do with both issues. The first issue is inadequate hearing. The husband might perceive speech as mumbling because his hearing loss leaves him unable to segregate his wife’s voice from the background noise. In addition to reducing audibility, hearing loss may degrade the fidelity of the sensory encoding of supra-threshold (audible) stimuli [2]. Such supra-threshold deficits impair the ability to separate the voice of interest from competing sounds [3].

The second issue for the husband is inadequate understanding. Language comprehension is a high-level cognitive function that requires the brain to identify individual words, establish structural relations between the words, and integrate this material into meaning. Older adults have more difficulty in understanding than younger adults when speech flows rapidly and near-continuously [4, 5], as ageing is typically accompanied by a reduction in the ability to hold and simultaneously manipulate information in immediate memory (i.e. working memory [6]). Such effects of age-related decline in cognitive processing can be exacerbated by degraded auditory processing, making understanding difficult in complex everyday settings.

Traditionally, speech communication has been assessed clinically with speech intelligibility tests, which typically present consecutive sentences separated by silent periods during which listeners repeat the words. The results of such tests quite accurately reflect changes in the audibility of speech information, and consequently they have been useful tools for the assessment of hearing loss and hearing aids. However, these tests do not require listeners to engage with important features of everyday communication, such as dealing with a continuous flow of information, understanding and interpreting that information, and flexibly setting goals to monitor multiple talkers or to switch from one talker to another. Particularly for older listeners with hearing loss, these features of natural communication place greater demands on the cognitive system (on sustained attention and working memory capacity) than do current clinical speech tests. It is therefore not surprising that the benefit of wearing hearing aids, as measured by improvement on typical speech tests, does not always predict success with hearing aids in everyday life.

We have embarked on creating a new speech test that should better capture the processing demands on the brain in real communication situations, and so might better predict listeners’ real-world performance. In our test [7, 8], the listener is surrounded by a circle of loudspeakers, and the voices of different talkers, each narrating a different story, are presented simultaneously from different locations. Listening comprehension is tested by asking questions about the content of the stories. Each question is asked immediately after the relevant information occurs in the story, in order to reduce demands on memory, and has two answer choices. In the ‘semantic’ condition, one answer choice paraphrases the original material, so listeners have to ‘listen to the meaning’ to get the correct answer; the other answer choice is unrelated to the meaning of what was said. We also test performance in a ‘phonetic’ condition, in which the original material is used verbatim in the response choices, so listeners can get the correct answer by only ‘listening to the words’ without needing to understand the meaning.
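Because each question offers two answer choices, a listener who guesses would be expected to get about half of the questions right. The sketch below shows one way a reader might check whether a score exceeds that chance level, using a one-sided binomial probability. The function name and the example score are hypothetical illustrations, not part of the published test or its analysis.

```python
from math import comb


def p_value_above_chance(n_correct: int, n_questions: int, chance: float = 0.5) -> float:
    """Probability of scoring at least n_correct out of n_questions by guessing.

    Assumes independent questions, each answered correctly with probability
    `chance` (0.5 for two answer choices). This is an illustrative assumption,
    not the analysis used in the cited studies.
    """
    return sum(
        comb(n_questions, k) * chance**k * (1 - chance) ** (n_questions - k)
        for k in range(n_correct, n_questions + 1)
    )


# Hypothetical example: 30 of 40 two-choice questions answered correctly.
p = p_value_above_chance(30, 40)
print(f"Probability of 30/40 or better by guessing: {p:.4f}")
```

A small probability suggests the listener was using information from the story rather than guessing; a score near 50% would not be distinguishable from chance.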

We have quantified the extra cognitive demands of understanding speech relative to only identifying speech sounds by testing young normal-hearing adults using two challenging tasks that capture the dynamic aspects of real-world communications. In one task [7], which required the communication skill of dividing attention between talkers, listeners were encouraged to not only pay attention to the target talker but to also eavesdrop on the other talkers if they could. In the phonetic condition, listeners were able to hear some words from competing talkers with no effect on their ability to respond to the words from the target talker, which suggests that listeners are able to simultaneously maintain and access the sensory trace of phonetic information from more than one talker. In the semantic condition, listeners were either unable to report any information from competing talkers or they showed a performance tradeoff between understanding the target talker and understanding the competing talker. We think that the higher cognitive demands of understanding speech compared to simply repeating words reduced the listener’s ability to simultaneously process what was being said by the other talkers.

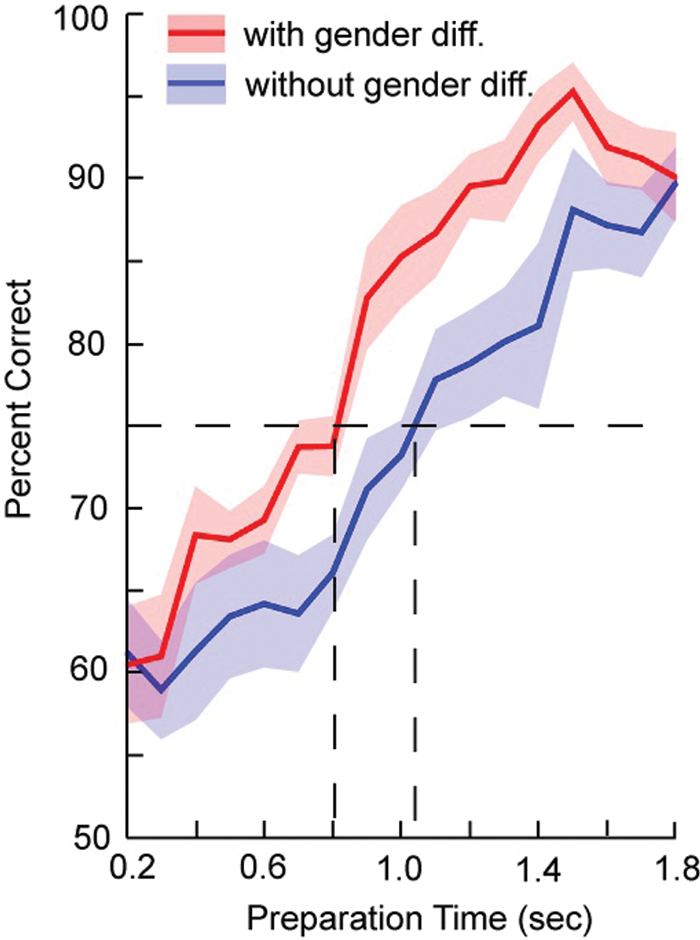

In the other task [8], which required the skill of rapidly switching attention between talkers, a visual display designated one of the talkers as the source for the next question. From time to time, listeners were cued visually to switch attention to a newly designated target talker. We measured performance as a function of preparation time (the interval between the moment when the cue to switch was displayed and the moment when the information relevant to the next question occurred in the story). In the phonetic condition, listeners needed about 0.6 seconds of preparation time to answer 75% of the questions correctly. In contrast, listeners needed about twice as much preparation time to understand the new target talker in the semantic condition. The longer preparation time for switching attention to a new target in the semantic condition indicates that understanding speech takes longer to process than identifying speech. Again, we think that the findings reflect the high cognitive demands of understanding speech and illustrate the shortcomings of evaluating real-world communication with speech tests that do not require understanding meaning.
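To make the idea of a preparation time "needed to answer 75% of the questions correctly" concrete, the sketch below reads that value off a performance curve by linear interpolation. The function name and all data points are hypothetical, chosen only to echo the approximate values quoted above; they are not the study's data, and the published analysis may have used a different fitting method.

```python
from typing import Optional, Sequence


def threshold_preparation_time(
    prep_times: Sequence[float],
    proportion_correct: Sequence[float],
    criterion: float = 0.75,
) -> Optional[float]:
    """Shortest preparation time (s) at which interpolated performance reaches
    the criterion, or None if the criterion is never reached."""
    points = sorted(zip(prep_times, proportion_correct))
    if points[0][1] >= criterion:
        return points[0][0]
    for (t0, p0), (t1, p1) in zip(points, points[1:]):
        if p0 < criterion <= p1:
            # Linear interpolation between the two bracketing measurements.
            return t0 + (criterion - p0) * (t1 - t0) / (p1 - p0)
    return None


# Hypothetical proportions correct at each preparation time (seconds).
prep = [0.2, 0.4, 0.6, 0.8, 1.0, 1.2, 1.4]
phonetic = [0.55, 0.65, 0.75, 0.82, 0.88, 0.90, 0.92]
semantic = [0.50, 0.55, 0.60, 0.65, 0.70, 0.75, 0.85]

print("phonetic:", threshold_preparation_time(prep, phonetic))
print("semantic:", threshold_preparation_time(prep, semantic))
```

With these made-up curves, the semantic condition reaches the criterion at roughly twice the preparation time of the phonetic condition, which is the pattern described in the text.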

Figure 1: Subject performance on the switching attention task. Three talkers at different locations in the frontal horizontal plane told three different stories simultaneously. When the talker at the centre was male and the talkers on the sides were female (red), listeners needed about 0.8 seconds of preparation time to switch attention effectively enough to get 75% of questions correct from the new target story. This was shorter than the approximately 1 second of preparation time needed to switch attention among three female talkers (blue).

Can manipulation of auditory stimuli improve cognitive processing? To answer this question, we measured how quickly young normal-hearing adults can switch attention between three female talkers or between one male and two female talkers in the semantic condition. The gender difference was a convenient way to assess whether an acoustical change would affect performance on a cognitively demanding task. We found that listeners were better at switching to the new target when there was a gender difference between talkers (Figure 1). This suggests that acoustic differences that enable better segregation of voices can facilitate performance on the challenging cognitive tasks we often encounter in everyday life, such as comprehending speech while flexibly deploying attention.

Tests such as those we are developing could be used to better understand the interaction between auditory and cognitive processing that people who are hard of hearing experience in many everyday listening situations. Such tests may also offer a better way to evaluate the influences of hearing aid technologies on real-world communication in laboratory or clinical settings. We hope our new speech test featuring the cognitive load typical of everyday listening can be helpful in developing hearing aid technologies to help the husband in the vignette at the beginning of the article not just hear his wife better but also understand her better.

References

1. Pichora-Fuller MK. Effects of age on auditory and cognitive processing: implications for hearing aid fitting and audiologic rehabilitation. Trends Amplif 2006;10:29-59.

2. Moore BC. Perceptual consequences of cochlear hearing loss and their implications for the design of hearing aids. Ear Hear 1996;17:133-61.

3. Shinn-Cunningham BG, Best V. Selective attention in normal and impaired hearing. Trends Amplif 2008;12:283-99.

4. Wingfield A. Cognitive factors in auditory performance: context, speed of processing, and constraints of memory. J Am Acad Audiol 1996;7:175-82.

5. Schneider BA, Daneman M, Pichora-Fuller MK. Listening in aging adults: from discourse comprehension to psychoacoustics. Can J Exp Psychol 2002;56:139-52.

6. Salthouse TA. The aging of working memory. Neuropsychology 1994;8:535-43.

7. Hafter ER, Xia J, Kalluri S. A naturalistic approach to the cocktail party problem. Adv Exp Med Biol 2013;787:527-34.

8. Hafter ER, Xia J, Kalluri S, et al. Attentional switching when listeners respond to semantic meaning expressed by multiple talkers. Proceedings of Meetings on Acoustics 2013;19:050077.

Declaration of Competing Interests: JX, SK and BE are employed at Starkey Hearing Research Center.