Steve Bell is a lecturer at the University of Southampton and a member of the British Society of Audiology’s (BSA) Special Interest Group in Electrophysiology. Given the current surge in interest in electrophysiology, both in rehabilitation and diagnostic arenas, Steve was invited to give some thoughts on new directions in electrophysiology, focussing primarily on clinical applications of auditory electrophysiology but also touching on a surprising link between electrophysiology and telepathy research.

To understand where we are going it helps to have some idea of where we have come from: back in 1929, Hans Berger first documented neural potentials from the scalp. It is thought that Berger was actually searching for a source of telepathy following some strange personal events. Sadly Hans never proved telepathy, but his discovery of the electroencephalogram (EEG) had great impact in the field of neurology. Shortly after, in 1939, Pauline Davis demonstrated that the human EEG altered with auditory stimulation, an early demonstration of an auditory evoked potential.

A problem was (and still is) that the electrical signals generated in the auditory system are small compared to the electrical activity from the rest of the brain and body, so they are challenging to measure. The 1950s and 60s saw the development of computer-based technology for coherent averaging of brain responses (which reduces interfering activity so that the response is seen more clearly). Improvements in measurement technology led to the rapid discovery of different evoked potentials. The auditory late response was initially used for clinical measurements of hearing threshold, although this was superseded for clinical measurement by the auditory brainstem response, which is smaller but has the advantage of being unaltered by attention, so it can be used on sleeping infants.
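
As a rough illustration of why coherent averaging helps, the sketch below simulates a small response buried in much larger background activity and averages many stimulus-locked recordings. The response shape, amplitudes, number of sweeps and function names are all illustrative assumptions rather than values from any particular study; the point is only that activity unrelated to the stimulus shrinks roughly with the square root of the number of sweeps, while the time-locked response is preserved.

```python
import numpy as np

def coherent_average(epochs):
    """Average stimulus-locked epochs (one per row) sample by sample."""
    return np.asarray(epochs, dtype=float).mean(axis=0)

def simulate_epochs(n_epochs, n_samples=200, response_amp=0.5,
                    noise_sd=10.0, seed=0):
    """Return (epochs, true_response) for a toy evoked potential.

    Units are arbitrary (think microvolts); the Gaussian-shaped
    response and the noise level are assumptions for illustration.
    """
    rng = np.random.default_rng(seed)
    t = np.arange(n_samples)
    # Same small response in every epoch, time-locked to the stimulus.
    response = response_amp * np.exp(-0.5 * ((t - 80) / 10.0) ** 2)
    # Background activity differs from epoch to epoch.
    noise = rng.normal(0.0, noise_sd, size=(n_epochs, n_samples))
    return response + noise, response

epochs, response = simulate_epochs(n_epochs=2000)
average = coherent_average(epochs)

# Residual background activity before and after averaging.
single_sd = np.std(epochs[0] - response)
residual_sd = np.std(average - response)
print(f"one sweep: {single_sd:.2f}, after averaging: {residual_sd:.2f}")
```

With 2000 sweeps the residual background activity should fall by a factor of about 45 (the square root of 2000), which is what makes a response far smaller than the ongoing EEG visible.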

More recently, responses of the brain to modulations in a signal, the so-called ‘steady state’ responses, have been discovered at Terrence Picton’s lab (and, in parallel, by Australian researchers). These are only tens of billionths of a volt in size. It is fascinating that by simply putting a few electrodes on the scalp, it is possible to record such tiny signals from the brain. Nonetheless, these methods are now widely used for clinical measurements, in some countries in preference to the auditory brainstem response.
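
One way to picture how such a tiny steady state response can be picked out is to look at the spectrum of the recording at the modulation frequency and compare it with neighbouring frequencies. The sketch below is a toy example under stated assumptions: the 40 Hz modulation rate, 50 nV response, noise level, recording length and the neighbouring-bin comparison are all chosen for illustration and are not taken from the work described here.

```python
import numpy as np

def spectrum_snr(signal, fs, target_hz, n_neighbours=10):
    """Power at target_hz divided by mean power in neighbouring bins.

    Hypothetical helper: a simple ratio for illustration, not a
    clinical detection statistic.
    """
    power = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    k = int(np.argmin(np.abs(freqs - target_hz)))
    lo = max(k - n_neighbours, 1)            # skip the DC bin
    hi = min(k + n_neighbours, len(power) - 1)
    neighbours = np.r_[power[lo:k], power[k + 1:hi + 1]]
    return power[k] / neighbours.mean()

fs = 1000.0            # sampling rate in Hz (assumed)
duration = 60.0        # seconds of recording (assumed)
mod_hz = 40.0          # modulation frequency of the stimulus (assumed)
t = np.arange(int(fs * duration)) / fs

rng = np.random.default_rng(1)
# A 50 nV response that follows the modulation, in 1 microvolt of noise.
response = 50e-9 * np.sin(2 * np.pi * mod_hz * t)
with_response = response + rng.normal(0.0, 1e-6, size=t.size)
without_response = rng.normal(0.0, 1e-6, size=t.size)

snr_with = spectrum_snr(with_response, fs, mod_hz)
snr_without = spectrum_snr(without_response, fs, mod_hz)
print(f"ratio with response: {snr_with:.1f}, without: {snr_without:.1f}")
```

Because the response sits at a single known frequency while the background activity is spread across the spectrum, a long enough recording lets the response stand out even though it is far smaller than the noise at any instant.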

Arguably the biggest impact of evoked response measurement for hearing care has been the introduction of neonatal hearing screening. The Wessex trial [1] demonstrated that otoacoustic emission and evoked response testing combined is highly sensitive in detecting infant hearing problems. Hearing thresholds can then be measured with brainstem responses within weeks of birth. In the UK this led to the introduction of universal screening. As a consequence, the average age at which a child with hearing impairment is fitted with a hearing aid has fallen from well over a year to around three months. Evidence suggests that early intervention maximises the ability of a hearing-impaired child to develop speech and language. However, early detection has led to a new challenge: although we can detect hearing loss and fit a hearing aid by a few weeks of age, how do we know that the hearing aid is working? Such young babies do not respond to sounds consistently and we can’t ask them how a hearing aid sounds.

One approach to evaluating an infant’s hearing aid is to measure auditory late responses to speech sounds. A challenge with doing this is that the late response is attention dependent and infants have variable attention. However, Suzanne Purdy and colleagues showed that with an appropriate stimulus to control attention, late responses can be recorded in infants.

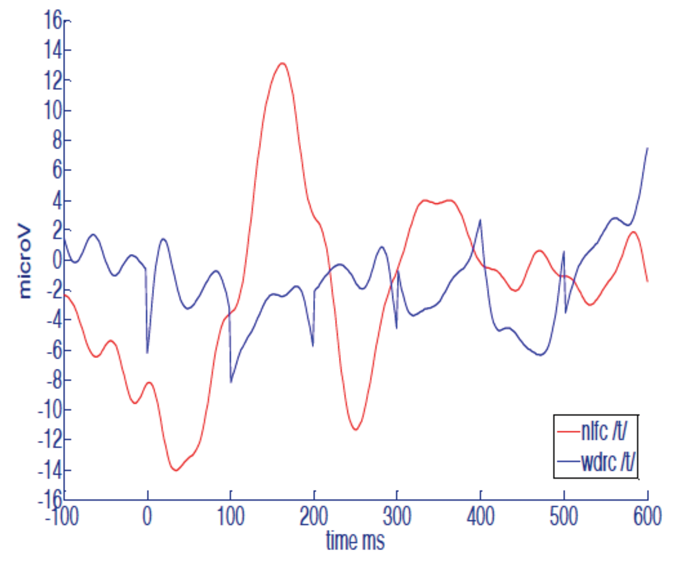

Before a method can be accepted for clinical use, issues such as reliability need to be evaluated. Currently, a clinical trial in Australia is evaluating the use of cortical evoked responses in infant hearing aid fitting. Auditory late responses may be able to demonstrate the benefit of frequency compression for infants (Figure 1). Manchester University is currently funded by the National Institute for Health Research to explore the reliability, and acceptability to parents, of using cortical evoked responses to test infants with hearing impairment. Soon we hope to have a better understanding of how the auditory late response may fit into the care pathway of the hearing-impaired child.

Figure 1. Example auditory late responses recorded from a hearing-impaired infant to the speech sound /t/. The blue line is with no frequency compression. The red line is with frequency compression activated in the hearing aid. The response appears to increase with frequency compression activated. This may indicate that frequency compression is allowing the brain access to the high frequency information in the /t/ speech sound. Data from the thesis of Katie Ireland, University of Southampton, 2013.

A technical issue is that these cortical responses are evoked by short repeating speech tokens (e.g. /da/) but modern hearing aids are complex non-linear devices that may not respond in the same way to short sounds as to running speech. Ideally we would like to play running speech and show that this activates the brain of someone with a hearing aid or cochlear implant, to demonstrate that they have access to speech.

At a recent conference, Terry Picton made a call to hearing researchers to move towards measuring evoked responses to speech. For running speech we lose the benefit of signal averaging to improve data quality, which is a challenge. However, extending the steady state measurement approach to speech appears promising. David Purcell has developed short repeating sentences where the brain response to each acoustic feature in the sentence can be measured [2]. Use of this approach for clinical hearing aid evaluation is under investigation. In line with Picton’s suggestion, in Southampton we are funded by EPSRC Grant EP/M026728/1 to explore how brain responses to speech might be used to evaluate and adjust hearing aids to optimise fittings for individuals.

The current drive towards wearable tech may also have clinical application. Danilo Mandic and colleagues have shown that EEG signals can be measured from electrodes embedded in an earmould (Figure 2). This raises the possibility that a hearing aid could in principle measure the brain response of a user and test whether speech signals received at the hearing aid microphone give a corresponding response in the brain of the user, or automatically adjust itself if not. An interesting by-product of ear-level EEG is that heart activity and other biomedical signals can be measured from such sensors, which raises the possibility of a hearing aid becoming a personalised health monitor.

Figure 2. EEG electrodes embedded in an earmould.

From www.technologyreview.com

Moving from the lab to clinical application is always a challenge. One application of auditory evoked potentials that has been recently demonstrated in the lab is to track auditory attention [3]. This raises the prospect of a brain-controlled hearing aid that can steer itself to what the user wants to listen to. The European Union currently funds the ‘COCOHA’ project exploring this possibility, although a wearable device may be some way off.

These are exciting times in auditory electrophysiology. I hope I have given a feel for some research areas which could be near to having clinical impact and some which are more focussed on the horizon. It is not yet clear whether we have reached the limits of what can be measured, or exactly what can be translated for clinical use, although of course it is important to be pragmatic about what will really help patients. At some point we reach the realm of science fiction. Possibly we already have: last year scientists in Washington demonstrated direct brain-to-brain communication by transmitting the EEG of one subject on an object detection task to another via magnetic brain stimulation. The second subject chose the correct objects far more often than would be expected by chance [4]. Brain-to-brain communication is telepathy by other means. If only Hans Berger had been around to see it.

References

1. Lutman ME, Davis AC, Fortnum HM, Wood S. Field sensitivity of targeted neonatal hearing screening by transient-evoked otoacoustic emissions. Ear Hear 1997;18:265-76.

2. Easwar V, Purcell DW, Aiken SJ, et al. Effect of Stimulus Level and Bandwidth on Speech-Evoked Envelope Following Responses in Adults With Normal Hearing. Ear Hear 2015;36(6):619-34.

3. Choi I, Rajaram S, Varghese LA, Shinn-Cunningham BG. Quantifying attentional modulation of auditory-evoked cortical responses from single-trial electroencephalography. Front Hum Neurosci 2013;7:115.

4. Stocco A, Prat CS, Losey DM, et al. Playing 20 Questions with the Mind: Collaborative Problem Solving by Humans Using a Brain-to-Brain Interface. PLoS One 2015;10(9):e0137303.

Declaration of Competing Interests: None declared.