Advances in navigation and augmented reality are transforming skull base surgery, offering greater precision and safety alongside emerging robotic tools.

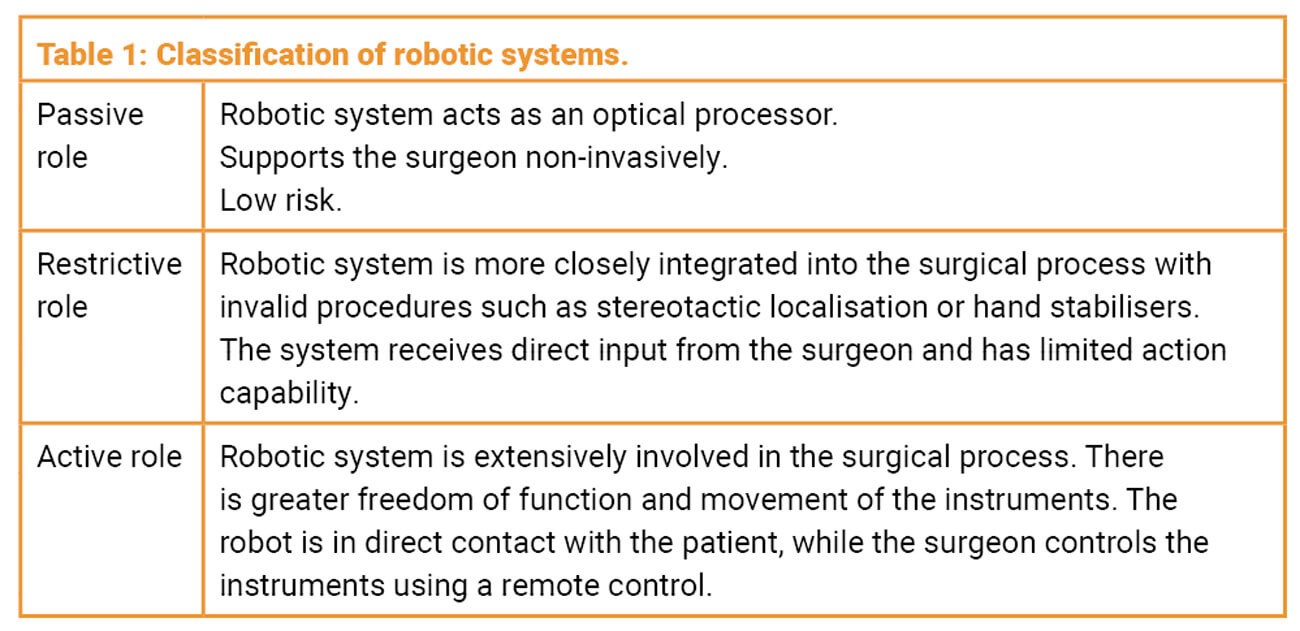

Surgical robots have been used in various forms across several surgical specialties for over 20 years [1,2]. However, only relatively recently have they come into routine use in otorhinolaryngology. To understand the capabilities and complexity of robotic surgical systems and to use them effectively, surgeons need a basic grasp of the general principles of robotics. A role-based classification of robotic systems facilitates the necessary interdisciplinary dialogue between engineers and physicians (Table 1) [3].

In this classification, passive systems correspond to modern platforms that present the surgical field to the surgeon in an optimised view. This category also includes the surgical navigation systems already used in many specialties, known as ‘image-guided surgery’ (IGS). Semi-active systems enable limited actions of instruments or imaging components – for example, memory or stored-position functions (ZEISS Kinevo 900, Carl Zeiss Meditec AG, Germany). Active systems – current surgical robotic systems – play a direct role in the surgical application process. Here, the robot is in contact with the patient while the surgeon controls the instruments via a remote console (Figure 1).

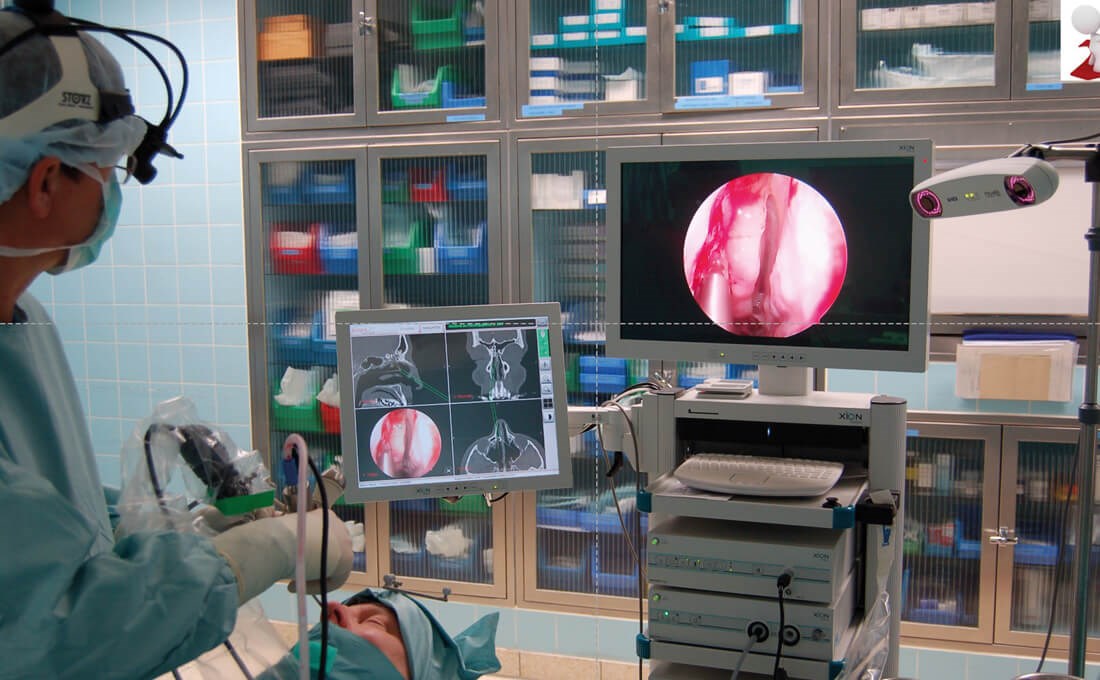

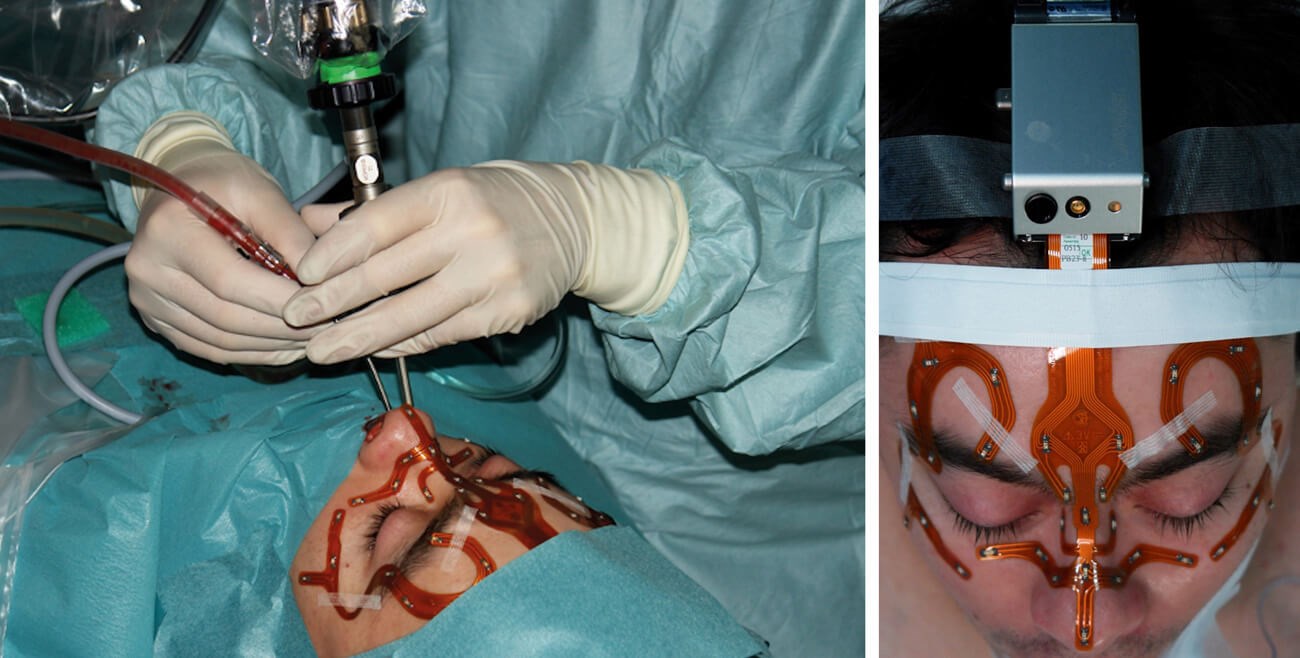

Figure 1: Use of an optical navigation system (Scopis®) during paranasal sinus surgery. The infrared camera receives the reflections of the markers on the patient’s head and on the instruments. (© Oliver Kaschke)

With increasing size and complexity of surgical telerobotic systems, the robot becomes a complete interface between surgeon and patient: no longer merely ‘connected’, but ‘part of’ the surgical process. The movements of the surgeon’s ‘master’ hand are converted into digital signals and transmitted to the robotic arm, which executes them accordingly.

One particular advantage of robots is the increased manoeuvrability of surgical instruments in confined spaces and along non-linear trajectories – i.e. when they are no longer in the surgeon’s direct line of sight. Conventional endoscopic and microscopic procedures in otorhinolaryngology and other fields generally offer four degrees of freedom. In contrast, robotic surgical systems such as the da Vinci (Intuitive Surgical, USA) offer six degrees of freedom. This added articulation has potential advantages in small, narrow anatomical regions, which are common in ENT surgery [3].

While robotics addresses how instruments can be moved with greater dexterity, navigation / IGS addresses where those instruments are in relation to patient anatomy. Intraoperative navigation or image-guided surgery – considered here as a primarily passive technology within the broader landscape of surgical systems – is an important pillar of modern skull base and sinus surgery. Based on data sets from various imaging modalities – mostly CT – IGS has been continuously developed since the early 1990s. In recent years, various systems have become established on the market, characterised above all by easier handling and reliability in use [4].

Figure 2a and b: Optical navigation system for transnasal pituitary surgery (Stryker Stealth®). The light-emitting diodes attached by means of a mask are the reference points for the camera. (© Oliver Kaschke)

Today, almost every ENT clinic in economically more developed countries has access to such an IGS system in the operating theatre. These systems have proved their worth in sinus surgery in particular, especially in difficult cases involving tumour processes, post-traumatic conditions or revision operations. The principle is based on continuous registration of a tracked instrument moving in a defined space. A distinction is made between optical systems (Figure 1), in which infrared cameras record signals from LEDs or reflective spheres on the patient and on the instruments (Figure 2a and b), and electromagnetic systems, in which the movement of a sensor embedded in the instrument is detected within a magnetic field generated by the device. Both techniques achieve comparable precision and accuracy in clinical application. Prerequisites include precise tracking of instruments in space (<1 mm) and robust patient registration, which in turn requires sufficiently high imaging resolution (approximately 0.5 mm slices). At present, optimal patient registration is most reliably achieved with bone-anchored markers. However, combinations of mask-based and surface registration offer sufficiently high accuracy for interventions at the skull base. Current work focuses on alternatives to conventional surface registration, aiming to improve accuracy, particularly in deep structures [5].
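One common way to check the quality of a patient registration is the fiducial registration error (FRE): the root-mean-square distance between the marker positions measured by the tracking system, mapped into image space, and the same markers identified in the CT data set. The following is a minimal sketch, assuming that a rigid transform has already been estimated by the navigation system. The transform `T`, the fiducial coordinates and the function names are hypothetical, illustrative values and are not taken from any specific product.

```python
import math

def apply_rigid(transform, point):
    """Apply a 4x4 homogeneous rigid transform to a 3D point (units: mm)."""
    x, y, z = point
    return tuple(
        row[0] * x + row[1] * y + row[2] * z + row[3]
        for row in transform[:3]
    )

def fiducial_registration_error(transform, tracker_pts, image_pts):
    """RMS distance (mm) between transformed tracker fiducials and CT fiducials."""
    squared = [
        math.dist(apply_rigid(transform, t), i) ** 2
        for t, i in zip(tracker_pts, image_pts)
    ]
    return math.sqrt(sum(squared) / len(squared))

# Hypothetical transform: 90-degree rotation about z plus a translation (mm).
T = [
    [0.0, -1.0, 0.0, 10.0],
    [1.0, 0.0, 0.0, -5.0],
    [0.0, 0.0, 1.0, 2.0],
    [0.0, 0.0, 0.0, 1.0],
]

# Hypothetical fiducials in tracker space and their (slightly noisy) CT positions.
tracker_fiducials = [(0, 0, 0), (50, 0, 0), (0, 40, 0), (0, 0, 30)]
image_fiducials = [
    (10.3, -5.0, 2.0),
    (10.0, 45.4, 2.0),
    (-30.0, -5.0, 2.0),
    (10.0, -5.0, 32.5),
]

fre_mm = fiducial_registration_error(T, tracker_fiducials, image_fiducials)
# Illustrative acceptance threshold only; not a clinical recommendation.
registration_acceptable = fre_mm < 1.0
```

A low FRE at the markers does not by itself guarantee equally low error at a deep target, which is one reason why accuracy in deep structures remains a focus of current work.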

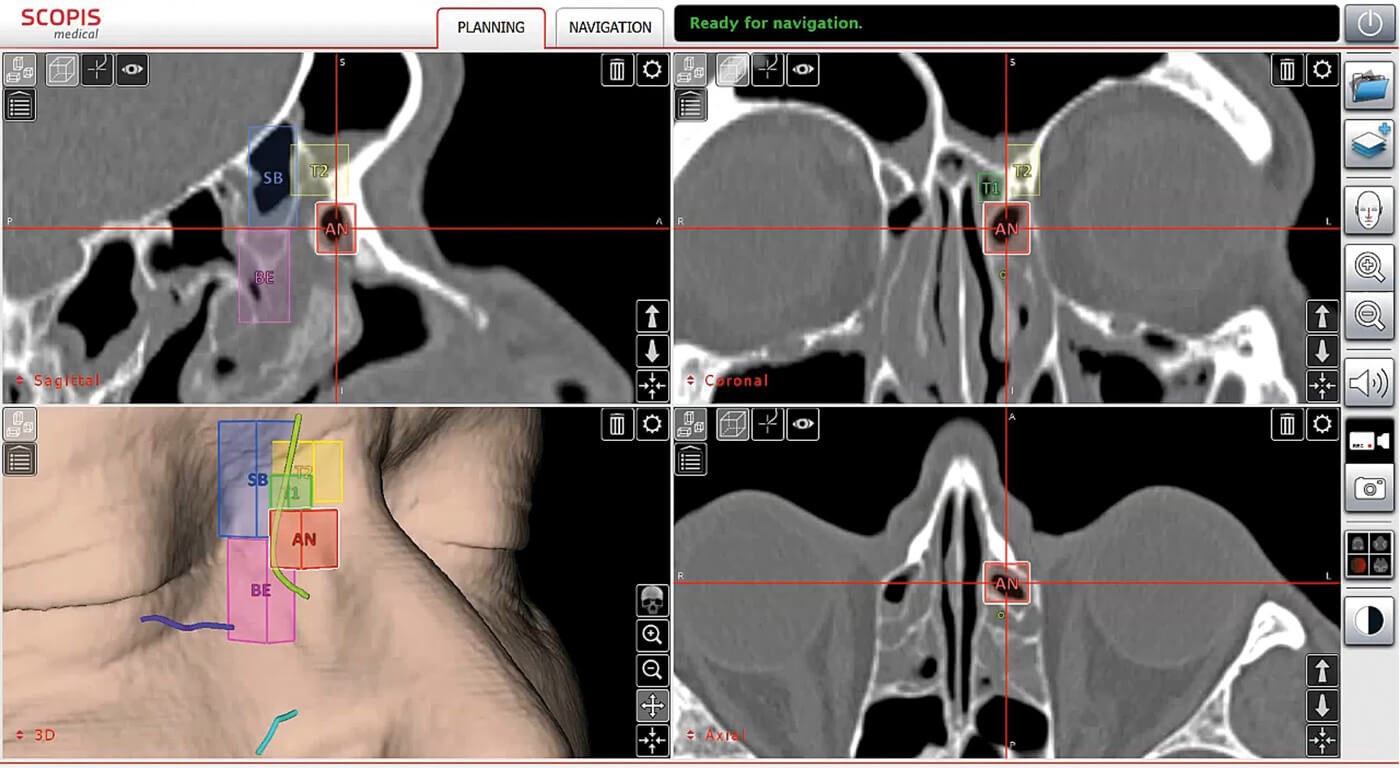

Figure 3: Use of the TGS software to analyse the individual anatomy of pneumatised sections of the paranasal sinuses and for planning paths for surgery (Scopis®). The International Classification of Sinus Anatomy based on Wormald’s Building Block System is used [6]. (© Oliver Kaschke)

A real innovation in IGS has been the implementation of new software functions within navigation systems. Using dedicated graphical elements in the 3D CT or MRI data set, it is now possible to mark anatomical structures and perform formalised preoperative planning (Figure 3). Anatomical classification systems can be used to systematise preoperative analysis and help minimise risk during surgery [6]. Trajectories (surgical access corridors) and danger zones can be defined during planning and then visualised intraoperatively on the monitor within the endoscopic image. This ‘augmented reality (AR)’ capability was realised, for example, with the navigation system developed by Scopis (Germany; Figure 4).
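The geometric idea behind planned trajectories and danger zones can be illustrated with a short sketch. It assumes, purely for illustration, that the access corridor is modelled as a straight segment from an entry point to a target with a tolerance radius, and that a danger zone is modelled as a sphere. Commercial systems use their own, more elaborate representations; all names and values below are hypothetical.

```python
import math

def distance_to_segment(p, a, b):
    """Shortest distance (mm) from point p to the segment from a to b."""
    ab = [b[i] - a[i] for i in range(3)]
    ap = [p[i] - a[i] for i in range(3)]
    ab_len_sq = sum(c * c for c in ab)
    if ab_len_sq == 0.0:
        return math.dist(p, a)  # degenerate segment: entry equals target
    # Projection parameter, clamped so the closest point stays on the segment.
    t = sum(ap[i] * ab[i] for i in range(3)) / ab_len_sq
    t = max(0.0, min(1.0, t))
    closest = [a[i] + t * ab[i] for i in range(3)]
    return math.dist(p, closest)

def guidance_status(tip, entry, target, danger_centre, danger_radius, corridor_radius):
    """Classify the tracked tip relative to the planned path and a danger zone."""
    if math.dist(tip, danger_centre) <= danger_radius:
        return "danger"
    if distance_to_segment(tip, entry, target) <= corridor_radius:
        return "on_path"
    return "off_path"

# Hypothetical plan in image coordinates (mm).
entry, target = (0.0, 0.0, 0.0), (0.0, 0.0, 60.0)
danger_centre, danger_radius = (8.0, 0.0, 50.0), 4.0
corridor_radius = 3.0

status_on_path = guidance_status((1.0, 1.0, 30.0), entry, target,
                                 danger_centre, danger_radius, corridor_radius)
status_danger = guidance_status((6.0, 0.0, 49.0), entry, target,
                                danger_centre, danger_radius, corridor_radius)
status_off_path = guidance_status((0.0, 10.0, 20.0), entry, target,
                                  danger_centre, danger_radius, corridor_radius)
```

The danger-zone check is evaluated first so that a tip inside a protected region is always flagged, even if it also lies within the corridor tolerance.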

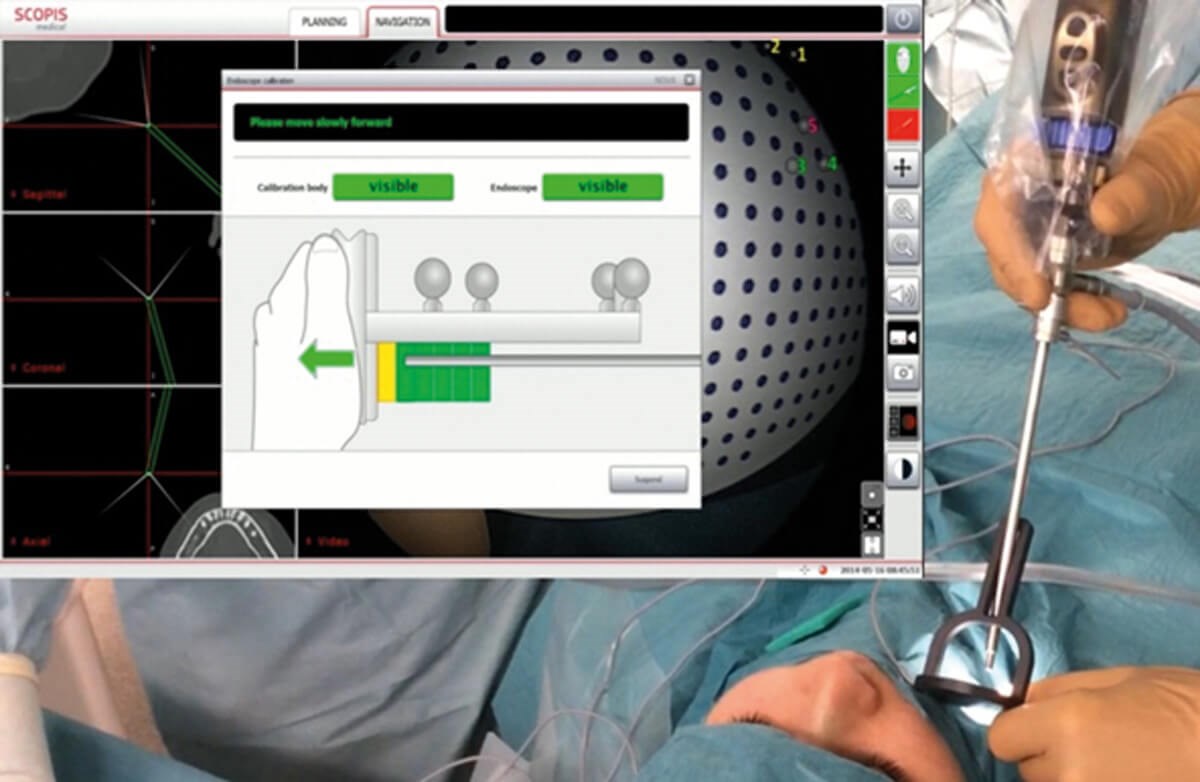

Figure 4: Calibration of the endoscope as a prerequisite for using TGS navigation. The endoscope is located in a calibrator which is referenced by image analysis. (© Oliver Kaschke)

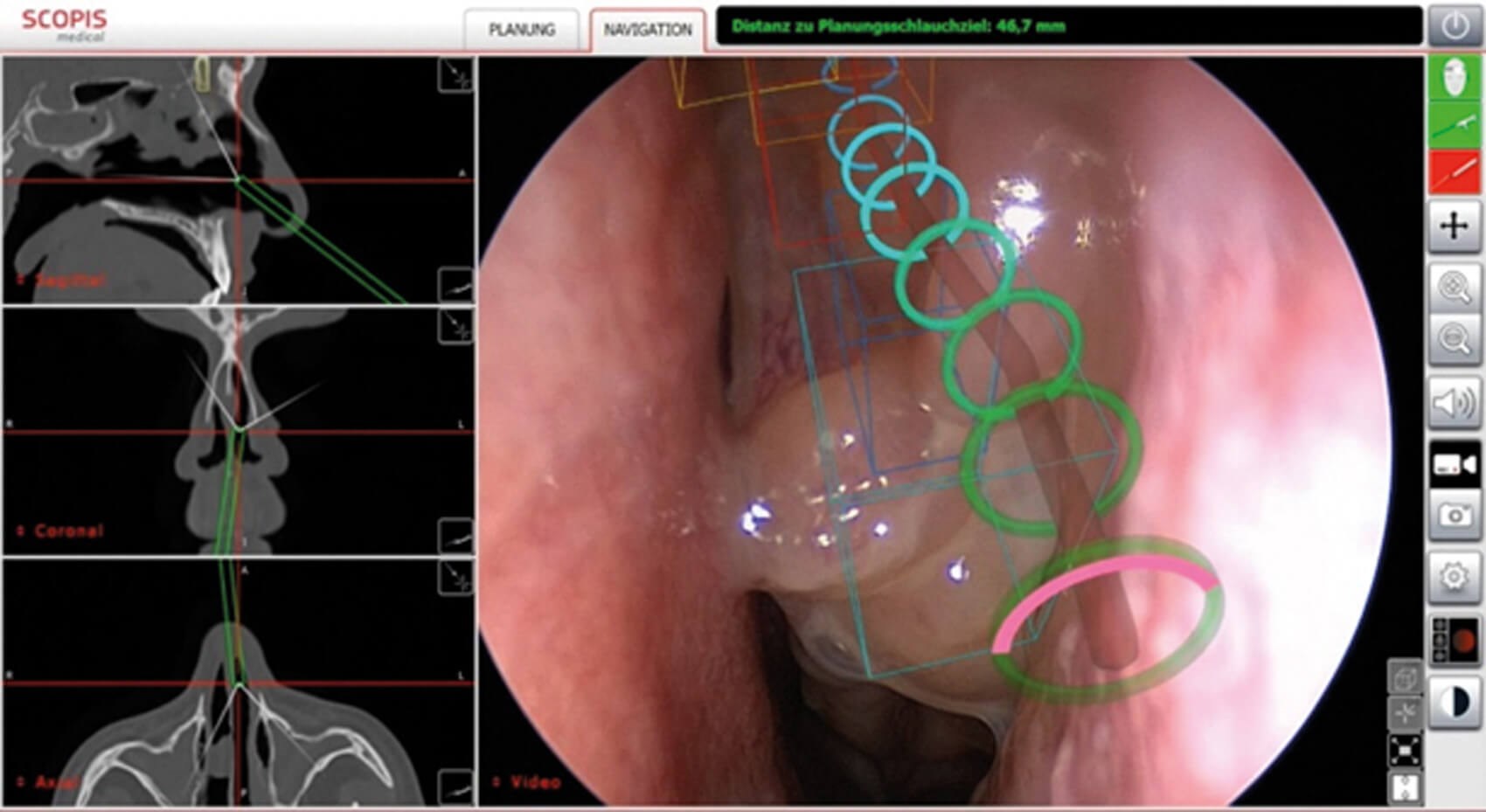

Reliable clinical application is supported by sensors attached near the distal end of instruments, enabling intuitive visualisation of instrument guidance in regions that are difficult to access. By combining software-based analysis of the patient’s individual anatomy with the concurrent definition of target structures using 3D graphic elements, optically controlled guidance can be achieved (Figure 5). Conceptually, this extends navigation to ‘target-guided surgery’ (TGS). Further development and commercialisation of the Scopis system is now being carried out by Stryker (USA).

Figure 5: Intraoperative visualisation of TGS navigation during endoscopic paranasal sinus surgery. The projection of the preoperatively analysed anatomical structures (building blocks) and the surgical path (target guidance) are visible. The navigated instrument (e.g. a suction tube) can be used to visualise its exact position along the target guidance path in the endoscopic image. (© Oliver Kaschke)

Evidence on AR in ENT and related fields has expanded in recent years [7]. Demonstrating that navigation techniques improve hard surgical outcomes remains challenging due to heterogeneous data and the large sample sizes required. For example, statistically reliable proof of a reduction in complications attributable to navigation alone would require a cohort of approximately 35,000 patients in a prospective design [8]. Alongside concerns about potential increases in operating time, intraoperative observations often cite advantages such as more precise and complete surgery and adjustments in surgical strategy guided by improved orientation. Clinical studies in navigation-assisted paranasal sinus surgery, including a meta-analysis [9], have shown advantages of navigation for the occurrence of all complications (OR = 0.58), and particularly for serious complications (OR = 0.36) and orbital complications (OR = 0.38).
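Why rare complications demand such large cohorts can be illustrated with the standard normal-approximation formula for comparing two proportions. The sketch below is not the calculation used in [8]; the complication rates, significance level and power are hypothetical assumptions chosen only to show how quickly the required sample size grows as the expected difference shrinks.

```python
import math
from statistics import NormalDist

def sample_size_two_proportions(p_control, p_treated, alpha=0.05, power=0.80):
    """Patients per arm for a two-sided comparison of two proportions."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_beta = NormalDist().inv_cdf(power)           # quantile for desired power
    p_bar = (p_control + p_treated) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(
            p_control * (1 - p_control) + p_treated * (1 - p_treated)
        )
    ) ** 2
    return math.ceil(numerator / (p_control - p_treated) ** 2)

# Hypothetical complication rates, for illustration only.
n_large_effect = sample_size_two_proportions(0.02, 0.01)   # 2% reduced to 1%
n_small_effect = sample_size_two_proportions(0.02, 0.015)  # 2% reduced to 1.5%
```

Because the required number of patients scales with the inverse square of the difference between the two rates, halving the expected benefit roughly quadruples the cohort, which is why single-centre studies rarely have the power to show an effect on complications.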

No clear effects were observed on postoperative bleeding, minor complications or revision rates [10]. Other measured outcomes include surgical volume and surgeon-reported experience: the use of navigation was associated with more extensive surgery in 81% of patients (notably, identification and opening of additional cells). Reported satisfaction with the system was high (8.6/10), and 95% of surgeons described a positive impact on intraoperative stress [11]. In a prospective, randomised study comparing AR-enhanced navigation with conventional navigation for paranasal sinus surgery, all surgeons reported an advantage with AR; navigation was used more frequently, and more time was invested in preoperative image analysis. There were no differences in postoperative complications, duration of surgery or postoperative recovery [12].

Despite the positive arguments in favour of existing robotic and navigation systems, key technological questions remain and further development is required. In robotics, advancing instrument guidance may enable more precise techniques with lower morbidity, though the lack of haptic feedback in large robotic platforms continues to pose a challenge and necessitates an appropriate learning curve for surgeons. In navigation / AR, the intraoperative identification and visualisation of neighbouring neurovascular structures can improve access and resection of target tissues, but realising this reliably depends on robust correspondence between preoperative and intraoperative image data. This, in turn, requires effective registration algorithms, ideally operating in real time with reliable modelling of intraoperative deformations as anatomy changes during the procedure.

In summary, robotics and navigation serve complementary roles in skull base surgery: robotics primarily enhances manoeuvrability and instrument control, while navigation and AR primarily enhance spatial orientation and execution of a preplanned strategy. Their thoughtful integration – supported by accurate registration, high-quality imaging and disciplined workflow – offers a path toward safer, more predictable surgery with reduced avoidable risk.

References

1. Nifong LW, Chu VF, Bailey BM, et al. Robotic mitral valve repair: experience with the da Vinci system. Ann Thorac Surg 2003;75(2):438–42.

2. Tewari A, Kaul S, Menon M. Robotic radical prostatectomy: a minimally invasive therapy for prostate cancer. Curr Urol Rep 2005;6(1):45–8.

3. Camarillo DB, Krummel TM, Salisbury Jr JK. Robotic technology in surgery: past, present, and future. Am J Surg 2004;188(4A Suppl):2S–15.

4. Citardi MJ, Yao W, Luong A. Next-Generation Surgical Navigation Systems in Sinus and Skull Base Surgery. Otolaryngol Clin North Am 2017;50(3):617–32.

5. Grauvogel TD, Engelskirchen P, Semper-Hogg W, et al. Navigation accuracy after automatic- and hybrid-surface registration in sinus and skull base surgery. PLoS One 2017;12(1):e0180975.

6. Wormald PJ, Hosemann W, Callejas C, et al. The International Frontal Sinus Anatomy Classification (IFAC) and Classification of the Extent of Endoscopic Frontal Sinus Surgery (EFSS). Int Forum Allergy Rhinol 2016;6(7):677–96.

7. Wong K, Yee HM, Xavier BA, Grillone GA. Applications of Augmented Reality in Otolaryngology: A Systematic Review. Otolaryngol Head Neck Surg 2018;159:956–67.

8. Smith TL, Stewart MG, Orlandi RR, et al. Indications for image-guided sinus surgery: the current evidence. Am J Rhinol 2007;21(1):80–3.

9. Dalgorf DM, Sacks R, Wormald PJ, et al. Image-guided surgery influences perioperative morbidity from endoscopic sinus surgery: a systematic review and meta-analysis. Otolaryngol Head Neck Surg 2013;149(1):17–29.

10. Vreugdenburg TD, Lambert RS, Atukorale YN, Cameron AL. Stereotactic anatomical localization in complex sinus surgery: A systematic review and meta-analysis. Laryngoscope 2016;126(1):51–9.

11. Vicaut E, Bertrand B, Betton JL, et al. Use of a navigation system in endonasal surgery: Impact on surgical strategy and surgeon satisfaction. A prospective multicenter study. Eur Ann Otorhinolaryngol Head Neck Dis 2019;136(6):461–4.

12. Linxweiler M, Pillong L, Kopanja D, et al. Augmented reality-enhanced navigation in endoscopic sinus surgery: A prospective, randomized, controlled clinical trial. Laryngoscope Investig Otolaryngol 2020;5(4):621–9.

Declaration of competing interests: None declared.

Oliver Kaschke will present on this topic at the 20th ENT Masterclass® in January 2026.